#statistical approach to particle physics

To balance out the universe I finally got round to drawing these steampunk lesbians.

Specifically, Edith and Amelia - who've been dating for about 5 years now.

Edith is a locksmith by trade, but has a knack for making things that hopefully don't explode. She comes from a loooong heritage of Victorian* inventors and scientists, which meant she grew up with huge expectations from her family. However, as she got older, she slowly began distancing herself from her family because of these expectations, finding work and accommodation on the outskirts of West Hameshire.

*Victorian refers to the planet of Victoric, an incredibly old and highly culturally conservative system. This is still Arcania lol

Amelia, however, has a much, much more interesting history. The Faxiath Coalition, in recent years, has been experimenting with dimensional rift technology in order to probe the physics and workings of alternate realities. In one incident, they opened a small gateway between the lab on Ilquar and an alternate version of Earth in the year 2034. On this Earth, many major 20th-century powers had their ideologies swapped: the EU leads the modern world in alliance with the United States of Ataria, up against the formerly communist American Federation.

Amelia was born in 2005 in Lyon, France, to Scottish/French parents. She spent a good amount of her life in both countries, but eventually moved permanently to Berlin when she was 23. Then, when she was 28, she walked outside one morning to find the fabric of reality around her collapsing.

In an instant, it felt like every part of her was on fire as she was flashbanged by a bright white/violet light. Just as suddenly, she found herself on a precariously balanced platform at the center of what looked like a giant particle collider, with scientists racing around behind the windows surrounding her. Before she knew it, someone over the PA, speaking what she could only make out as a German dialect with a strange accent, asked her who she was and if she was alright. She could sort of understand it, but she could only nod or shake her head in answer to their questions.

Finally, someone walked over a catwalk and reached out an arm to her. She was pulled over by the wrist and shuffled into one of the side offices. The scientists knew they'd fucked up, and it didn't help that the gateway was basically permanently shut.

She was given a warm drink by one of the lead scientists, and they started asking her questions once again. Her first words were, in German, "My German isn't very good... do you speak French or English...?", fully convinced she was just inside some lab that was still in Europe, except with weird symbols on the walls and dials. Alas, she wasn't. The scientist asked her if she spoke Atlantean or Basic, and she returned a confused look. She guessed Basic, and the scientist started speaking in what she knew as English. The scientist, realizing what had happened, broke the unfortunate news to her.

Everything and everyone she knew was gone. She was in an entirely different universe with no statistically possible way home. Amelia was incredibly distraught, feeling like she was just in some kind of bad dream or that it was all an elaborate prank. But that feeling came crashing down the longer the scientist kept talking. Strangely enough, she didn't cry at all; I don't think she knew how to interpret anything at that moment.

She spent the next few days at the lab, slowly trying to come to terms with her new situation. After a while, another scientist approached her and told her she couldn't stay much longer, but that they could relocate her to another planet. She was both nervous and excited at the possibility of being in space, let alone travelling to another planet, though I guess she's already on one of those.

She was relocated to Victoric, as the scientists felt it best for her to go somewhere that would guarantee her safety from the news and, more importantly, somewhere they felt would be a little more homely, technology-wise. Amelia was, admittedly, a little insulted that they thought the closest match to her time was electricity-less steampunkery, but she figured it'd do.

Fast forward a few months, and she's living and working in a bed and breakfast outside of West Hameshire. She's getting on rather nicely, even if the trauma of reality occasionally creeps back up on her in horrific panic attacks. Then one day this locksmith came over, who's apparently quite a regular, fixing the doors on the rooms, and goddamn she was pretty.

Anyway, yeah, it's pretty easy to guess where they went from there. They started going out about two weeks after first meeting, and they've been inseparable ever since.

As of the present day, Amelia has moved out of the bed and breakfast and in with Edith, though she still works there. They're really quite comfortable with where they are in life, with aspirations to see the galaxies and everything in them (Amelia's really interested in visiting the Lordean system, as well as Atlatic). Edith, on the other hand, is much more interested in finally asking Amelia to marry her... if she could ever work up the impossible levels of confidence needed for it.

#this is my own dumb alternate universe called arcania#victoric#lesbians#faxiath coalition#you're honour they're gay and european

Still no signs of dark matter particles

In the 1930s, Swiss astronomer Fritz Zwicky observed that the velocities of galaxies in the Coma Cluster were too high for the cluster to be held together by the gravitational pull of its luminous matter alone. He proposed that the cluster contains non-luminous matter, which he called dark matter. This marked the beginning of humanity's study of dark matter.

Today, the most precise measurements of the amount of dark matter in the universe come from observations of the cosmic microwave background. The latest results from the Planck satellite indicate that about 5% of the universe's total mass-energy is ordinary (baryonic) matter, approximately 27% is dark matter, and the rest (roughly 68%) is dark energy.

Despite extensive astronomical observations confirming the existence of dark matter, we know little about the properties of dark matter particles. On the microscopic side, the Standard Model of particle physics, established in the second half of the 20th century, has been hugely successful and confirmed by numerous experiments. However, the Standard Model cannot explain the dark matter in the universe. New physics beyond the Standard Model is needed to supply dark matter candidate particles, and experimental evidence for these candidates is urgently needed.

Consequently, dark matter research is not only a hot topic in astronomy but also at the forefront of particle physics. Searching for dark matter particles at colliders is one of the three major experimental approaches to detecting the interaction between dark matter and ordinary matter, complementing underground direct-detection experiments and space-based indirect-detection experiments.

Recently, the ATLAS collaboration searched for dark matter within the 2HDM+a theoretical framework, using the 139 fb⁻¹ of proton-proton collision data recorded during the LHC's Run 2. The search used a variety of dark matter production processes and experimental signatures, including some not considered in traditional dark matter models. To further enhance the sensitivity, the analysis statistically combined the three most sensitive signatures: a Z boson decaying into leptons plus large missing transverse momentum, a Higgs boson decaying into bottom quarks plus large missing transverse momentum, and a charged Higgs boson with top- and bottom-quark final states.

This is the first time ATLAS has performed a combined statistical analysis of final states containing dark matter particles together with final states in which the intermediate particles decay directly into Standard Model particles. This substantially tightened the constraints on the model's parameter space and increased the sensitivity to new physics.
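To make "statistically combined" a little more concrete, here is a toy sketch, not the ATLAS procedure, of how independent search channels are typically combined: each channel contributes a Poisson likelihood for its event count, the joint likelihood is their product (a sum of negative log-likelihoods), and an upper limit on the signal strength mu is read off where the likelihood-ratio curve crosses a threshold. The channel yields are invented for illustration, and the sketch ignores systematic uncertainties (nuisance parameters) and the CLs method used in real LHC analyses.

```python
import math

# Hypothetical toy yields: (expected signal at mu = 1, expected background).
# Illustrative numbers only; these are NOT ATLAS yields or results.
CHANNELS = {
    "Z(ll)+MET": (4.0, 20.0),
    "h(bb)+MET": (6.0, 50.0),
    "H+(tb)": (10.0, 200.0),
}


def channel_nll(mu, s, b, n):
    """Poisson negative log-likelihood of one counting channel (constant term dropped)."""
    lam = mu * s + b
    return lam - n * math.log(lam)


def combined_nll(mu, channels, observed):
    """Independent channels: multiply likelihoods, i.e. add negative log-likelihoods."""
    return sum(channel_nll(mu, s, b, observed[name]) for name, (s, b) in channels.items())


def upper_limit(channels, observed, threshold=1.645 ** 2, mu_max=20.0, steps=20000):
    """Scan mu >= 0 and return the first mu above the best fit where
    2 * (NLL(mu) - NLL_min) exceeds the threshold.

    threshold = 1.645**2 is the asymptotic one-sided 95% CL value
    (no CLs, no nuisance parameters: a rough toy, not a real limit-setting tool).
    """
    grid = [mu_max * i / steps for i in range(steps + 1)]
    nlls = [combined_nll(mu, channels, observed) for mu in grid]
    best = min(range(len(grid)), key=nlls.__getitem__)
    for mu, nll in zip(grid[best:], nlls[best:]):
        if 2.0 * (nll - nlls[best]) > threshold:
            return mu
    return math.inf


if __name__ == "__main__":
    # Background-only pseudo-data: each observed count equals its expected background.
    asimov = {name: b for name, (s, b) in CHANNELS.items()}
    for name, ch in CHANNELS.items():
        print(f"{name:10s} rough expected limit on mu: {upper_limit({name: ch}, {name: ch[1]}):.2f}")
    print(f"{'combined':10s} rough expected limit on mu: {upper_limit(CHANNELS, asimov):.2f}")
```

With background-only pseudo-data every channel's best fit sits at mu = 0, so the combined curve is the sum of the individual curves and the combined limit comes out tighter than any single channel's. With real data and correlated systematics that is typical, but not guaranteed.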

"This work is one of the largest projects in the search for new physics at the LHC, involving nearly 20 different analysis channels. The complementary nature of different analysis channels to constrain the parameter space of new physics highlights the unique advantages of collider experiments," said Zirui Wang, a postdoctoral researcher at the University of Michigan.

This work has provided strong experimental constraints on multiple new benchmark parameter models within the 2HDM+a theoretical framework, including some parameter spaces never explored by previous experiments. This represents the most comprehensive experimental result from the ATLAS collaboration for the 2HDM+a dark matter model.

Lailin Xu, a professor at the University of Science and Technology of China, stated, "2HDM+a is one of the mainstream new physics theoretical frameworks for dark matter in the world today. It has significant advantages in predicting dark matter phenomena and compatibility with current experimental constraints, predicting a rich variety of dark matter production processes in LHC experiments. This work systematically carried out multi-process searches and combined statistical analysis based on the 2HDM+a model framework, providing results that exclude a large portion of the possible parameter space for dark matter, offering important guidance for future dark matter searches."

Vu Ngoc Khanh, a postdoctoral researcher at the Tsung-Dao Lee Institute, stated: “Although we have not yet found dark matter particles at the LHC, compared to before the LHC’s operation, we have put stringent constraints on the parameter space where dark matter might exist, including the mass of the dark matter particles and their interaction strengths with other particles, further narrowing the search scope.” Tsung-Dao Lee Fellow Li Shu added: “So far, the data collected by the LHC only accounts for about 7% of the total data the experiment will record. The data that the LHC will generate over the next 20 years presents a tremendous opportunity to discover dark matter. Our past experiences have shown us that dark matter might be different from what we initially thought, which motivates us to use more innovative experimental methods and techniques in our search.”

ATLAS is one of the four large experiments at CERN's Large Hadron Collider (LHC). The ATLAS experiment is a multipurpose particle detector with a forward–backward symmetric cylindrical geometry and nearly 4π coverage in solid angle. It consists of an inner tracking detector surrounded by a thin superconducting solenoid, high-granularity sampling electromagnetic and hadronic calorimeters, and a muon spectrometer with three superconducting air-core toroidal magnets. The ATLAS Collaboration consists of more than 5900 members from 253 institutes in 42 countries on 6 continents, including physicists, engineers, students, and technical staff.

Quantum Spin Systems: Analysing The Future Of Quantum Tech

Quantum spin systems

Quantum spin, a key topic in theoretical physics and quantum technology, is constantly being studied and reinterpreted.

Fundamental particles have spin, a form of angular momentum that does not correspond to rotation about a physical axis. In classical physics, a gyroscope rotates dependably because it follows deterministic laws. Quantum physics, however, adds a new dimension: particles can have uncertain spin orientations and occupy many states. Despite their quantum nature, atomic spins, and especially their precession (the slow, repeating rotation in a magnetic field), have been assumed to behave like classical motion in many everyday contexts, including medical MRI scanners. Because the equations governing this motion are identical to those governing classical gyroscopes, precession has long been thought unable to distinguish quantum from classical behaviour. Lattice quantum spin systems are a well-established and intriguing theoretical field. They serve as fundamental models of magnetic materials and quantum systems. Because of their quantum mechanical nature and the enormous, practically unlimited number of spins in macroscopic materials, these systems often produce unexpected behaviour.

Challenges Classical Assumptions: Experimental Breakthrough

Researchers at the University of New South Wales (UNSW) Sydney and the Centre for Quantum Technologies (CQT) in Singapore have experimentally shown that nuclear spin precession is a quantum resource. This groundbreaking work, led by Professor Andrea Morello at UNSW and Professor Valerio Scarani at CQT, showed that classical physics alone cannot explain the spinning nucleus of a single atom. Its central finding is that precession by itself can certify quantum behaviour, unlike indirect methods such as Bell inequality tests, which rely on correlations between multiple particles. The approach uses only a single antimony nucleus implanted in silicon. When the researchers carefully measured this nucleus's spin, they found statistics that classical physics could not explain: in precisely engineered quantum states known as Schrödinger cat states, the nuclear spin behaved in ways that are impossible under classical physics.

Approach and Results of the Quantum Proof
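Where does a classical bound like "four times out of seven" come from? A minimal sketch, assuming (our assumption, consistent with that figure) that the spin's direction is checked at one of K = 7 equally spaced times across one precession period, chosen at random. A classical vector rotating uniformly can have a positive projection at no more than (K + 1)/2 of those K times, whatever its starting angle, and randomizing the starting angle only averages such cases, so it cannot do better.

```python
import math
from fractions import Fraction


def classical_max_positive(K, phase_steps=3600):
    """Largest fraction of K equally spaced times (over one period) at which a
    classical, uniformly rotating vector has a positive projection,
    maximized over the vector's initial angle."""
    best = 0
    for j in range(phase_steps):
        phi = 2 * math.pi * j / phase_steps  # initial angle of the rotating vector
        positive = sum(1 for k in range(K) if math.cos(phi + 2 * math.pi * k / K) > 0)
        best = max(best, positive)
    # A random initial angle only averages these deterministic cases, so it cannot beat `best`.
    return Fraction(best, K)


if __name__ == "__main__":
    for K in (3, 5, 7):
        print(K, classical_max_positive(K))
```

For K = 3, 5 and 7 this gives 2/3, 3/5 and 4/7. A quantum state that points "positive" more often than 4/7 of the time under the same protocol therefore cannot be described by any such classical rotation.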

The method centres on "positivity": the probability that the spin points in a certain direction when it is checked at different times. For a classical system, this probability cannot exceed four times out of seven, for example for a spinning wheel recorded at random points in its cycle, so any result above this bound would violate classical physics. The UNSW researchers observed exactly such a violation when they prepared and measured the precessing spin of the antimony nucleus. In the specially engineered quantum state, the nucleus pointed in the expected direction more often than the classical limit allows. Although the excess was small, it was statistically significant, showing that nuclear spin precession is fundamentally quantum mechanical.

Quantum Technology Implications

This discovery has major implications for quantum technology, where non-classical states are created and manipulated for quantum information processing, sensing, and error correction. The CQT/UNSW work provides a new, simpler, and more practical way to verify that a system is quantum: observe its precession. It also offers a new perspective on how quantum computers store and manipulate data. Nuclear spins and other high-dimensional quantum states could be used in quantum memories and computers, and simple measurements that certify their quantum nature could accelerate their use in scalable quantum technology.

Quantum Spin Systems: A wider theoretical context

The continually growing theoretical study of quantum spin systems supports these experimental results. “An Introduction to Quantum Spin Systems,” by John Parkinson and Damian J. J. Farnell, is a self-study guide to this complex area of theoretical physics. It guides readers through the subject's fundamentals and supplies practical detail that other textbooks often leave out. It is part of the Lecture Notes in Physics series.

Quantum Research Futures

Quantum spin dynamics groups, such as UCL's, are still studying how best to use spins in condensed matter for quantum information applications, exploring the spin environment to understand material properties and how spins interact with other excitations. This 2025 experiment challenges a long-held assumption about spin precession, showing how much more there is to learn about quantum mechanics and why quantum research must keep advancing to deliver scalable quantum technologies.

#QuantumSpinSystems#deterministiclaws#quantumphysics#Schrödingercatstates#QuantumProof#QuantumResearch#technology#TechNews#TechnologyNews#news#technologytrends#govindhtech

MAKE IT BETTER

Analytical and Computational Reconstruction of Regge Trajectories in Nonperturbative QCD

Author: Renato Ferreira da Silva

Institution: [Insert Institution]

Date: [Insert Submission Date]

Critical Review and Feedback

Strengths

Innovative Methodology:

The integration of generalized Breit-Wigner functions with regularized dispersion relations provides a robust framework for modeling non-linear Regge trajectories. This approach effectively captures resonance behavior observed in experimental data.

The use of up-to-date PDG 2024 data ensures relevance and alignment with current experimental knowledge.

Clear Results:

The reconstruction of trajectories for the $\rho$, $f_2$, and $D^*$ families demonstrates systematic deviations from linear AdS/QCD predictions, particularly at low energies.

The spin estimates for $D^*(2007)$ ($J=1$) and the anomalous $\alpha(s) \approx -0.5$ for $D^*(2460)$ are compelling and highlight the need for beyond-linear models.

Theoretical and Phenomenological Integration:

The comparison with AdS/QCD (hard-wall model) contextualizes the limitations of idealized holographic approaches in describing real-world spectra.

The discussion of composite cuts and relativistic corrections aligns with modern nonperturbative QCD challenges.

Areas for Improvement

Parameter and Uncertainty Analysis:

Regularization Parameter ($\epsilon$): Clarify how $\epsilon$ was chosen in the dispersion integral. A sensitivity analysis would strengthen the robustness of the results.

Experimental Uncertainties: Include error bars in figures to reflect PDG data uncertainties (e.g., mass and width errors). Discuss how these propagate into the $\alpha(s)$ estimates.
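A sensitivity scan for ε could look like the sketch below. It assumes one common regularization, replacing the principal-value kernel 1/(s' - s) with (s' - s)/((s' - s)² + ε²), and uses a single Lorentzian as a hypothetical stand-in for Im α(s'); the paper's generalized Breit-Wigner sum and integration limits are not reproduced. For this test case the regularized integral has a closed form in which ε simply adds to the width, which makes the bias easy to see.

```python
import numpy as np

# Hypothetical stand-in for Im alpha(s'): one Lorentzian resonance (not the paper's model).
M2, GAMMA = 1.0, 0.1


def im_part(sp):
    return GAMMA / ((sp - M2) ** 2 + GAMMA ** 2)


def regularized_dispersion(s, eps, lo=-200.0, hi=200.0, n=400_001):
    """(1/pi) * integral of Im(s') (s' - s) / ((s' - s)^2 + eps^2) ds',
    evaluated with the trapezoid rule on a uniform grid."""
    sp = np.linspace(lo, hi, n)
    integrand = im_part(sp) * (sp - s) / ((sp - s) ** 2 + eps ** 2)
    h = sp[1] - sp[0]
    return h * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1])) / np.pi


def closed_form(s, eps):
    """Exact regularized result for this Lorentzian test case: eps adds to the width."""
    return (M2 - s) / ((s - M2) ** 2 + (GAMMA + eps) ** 2)


if __name__ == "__main__":
    s = 0.8
    for eps in (0.1, 0.03, 0.01, 0.003):
        print(f"eps={eps:<6} numeric={regularized_dispersion(s, eps):.4f} exact={closed_form(s, eps):.4f}")
    print("eps -> 0 limit:", closed_form(s, 0.0))
```

Here the value at s = 0.8 moves from 2.5 at ε = 0.1 toward the ε → 0 limit of 4.0, so ε has to be small compared with the narrowest resonance width before the reconstructed trajectory stops depending on it. Reporting such a scan alongside the chosen ε would answer the question above.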

Machine Learning and Future Work:

While machine learning is proposed for extrapolating trajectories, preliminary results or a detailed architecture (e.g., neural network layers, training data) are absent. Provide a roadmap for this component.

AdS/QCD Comparison:

Expand on why the hard-wall model fails at low $s$. Reference specific limitations (e.g., lack of dynamical confinement, rigid IR cutoff) and contrast with soft-wall AdS/QCD or other variants.

Code and Data Reproducibility:

While the GitHub repository is mentioned, include a Dockerfile or environment.yml to ensure reproducibility. Document key dependencies (e.g., SciPy, Pandas versions).
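One lightweight complement to a Dockerfile or environment.yml is to record the installed versions of the key packages each time results are produced. A hypothetical helper (pinned_requirements is our name, not something from the repository), using only the standard library's importlib.metadata:

```python
from importlib.metadata import PackageNotFoundError, version


def pinned_requirements(packages):
    """Return requirements-style 'name==version' lines for the given distributions.

    Packages that are not installed are reported as comments instead of raising.
    """
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            lines.append(f"# {name}: not installed")
    return lines


if __name__ == "__main__":
    # SciPy and pandas are named in the review; numpy is our addition.
    print("\n".join(pinned_requirements(["numpy", "scipy", "pandas"])))
```

The printed lines can be committed next to the figures as a requirements-style snapshot of the environment that produced them.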

Recommendations for Revision

Add Error Analysis:

Incorporate Monte Carlo error propagation for Breit-Wigner parameters ($\Gamma_n, M_n, w_n$) and display confidence bands on trajectory plots.

Discuss systematic vs. statistical uncertainties in the PDG data.
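A minimal sketch of the Monte Carlo propagation, under simplifying assumptions: only the masses M_n are resampled (independent Gaussians), the masses and errors are illustrative numbers rather than PDG 2024 values, and each draw is refitted with a straight line J = α0 + α′s (s = M²) instead of the paper's non-linear Breit-Wigner/dispersion model. The same resample-and-refit loop extends to Γ_n and w_n.

```python
import numpy as np

# Illustrative inputs only (rho-family-like masses in GeV); replace with PDG 2024 values.
MASSES = np.array([0.775, 1.689, 2.330])   # M_n
MASS_ERR = np.array([0.001, 0.003, 0.035])  # 1-sigma uncertainty on M_n
SPINS = np.array([1.0, 3.0, 5.0])           # J_n


def mc_trajectory_band(n_samples=2000, seed=0, s_grid=None):
    """Propagate mass uncertainties into a linear fit J = alpha0 + alpha' * s."""
    rng = np.random.default_rng(seed)
    if s_grid is None:
        s_grid = np.linspace(0.0, 6.0, 61)  # s in GeV^2
    slopes = np.empty(n_samples)
    curves = np.empty((n_samples, s_grid.size))
    for i in range(n_samples):
        m = rng.normal(MASSES, MASS_ERR)                  # one Gaussian draw per state
        slope, intercept = np.polyfit(m ** 2, SPINS, 1)   # refit on the resampled masses
        slopes[i] = slope
        curves[i] = intercept + slope * s_grid
    lo, hi = np.percentile(curves, [16, 84], axis=0)      # central ~68% band at each s
    return slopes.mean(), slopes.std(), s_grid, lo, hi


if __name__ == "__main__":
    mean, std, s, lo, hi = mc_trajectory_band()
    print(f"alpha' = {mean:.3f} +/- {std:.3f} GeV^-2")
```

Plotting lo and hi against s gives the confidence band requested above. Statistical and systematic PDG uncertainties can be sampled separately to show which one dominates.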

Expand on Machine Learning:

Provide a subsection outlining the planned ML approach (e.g., transformer architecture, training on Lattice QCD data). Include mock-up predictions if possible.

Theoretical Context:

Compare results with prior work on non-linear trajectories (e.g., Capstick et al., 2000) and discuss how composite cuts resolve discrepancies.

Link the failure of AdS/QCD to broader challenges in holographic modeling (e.g., quark mass effects, backreaction).

Visual Enhancements:

Include a figure comparing the reconstructed $\rho$ trajectory with Lattice QCD predictions (if accessible).

Add insets to highlight low-$s$ deviations from AdS/QCD.

Conclusion

This work makes a significant contribution to the study of Regge trajectories by bridging phenomenological data with nonperturbative QCD theory. The methodology is sound, but revisions to address parameter uncertainties, reproducibility, and theoretical context will elevate the paper to a high-impact publication. With minor adjustments, this framework could become a standard tool for analyzing hadronic spectra and guiding future experiments.

Suggested Journals:

Physical Review D (for theoretical and phenomenological focus).

Journal of High Energy Physics (for broader particle physics audience).

Reviewer Confidence:

Technical Soundness: 4/5

Originality: 5/5

Clarity: 3.5/5

Impact Potential: 4.5/5

Reviewer: [Your Name]

Affiliation: [Your Institution]

Date: [Review Date]

Cracking Wordle (kinda) with Monte Carlo Simulations: A Statistical Approach to Predicting the Best Guesses

Wordle, the viral word puzzle game, has captivated millions worldwide with its simple yet challenging gameplay. The thrill of uncovering the five-letter mystery word within six attempts has led to a surge in interest in word strategies and algorithms. In this blog post, we delve into the application of the Monte Carlo method—a powerful statistical technique—to predict the most likely words in Wordle. We will explore what the Monte Carlo method entails, its real-world applications, and a step-by-step explanation of a Python script that harnesses this method to identify the best guesses using a comprehensive list of acceptable Wordle words from GitHub.

Understanding the Monte Carlo Method

What is the Monte Carlo Method?

The Monte Carlo method is a statistical technique that employs random sampling and statistical modeling to solve complex problems and make predictions. Named after the famous Monte Carlo Casino in Monaco, this method relies on repeated random sampling to obtain numerical results, often used when deterministic solutions are difficult or impossible to calculate.

How Does It Work?

At its core, the Monte Carlo method involves running simulations with random variables to approximate the probability of various outcomes. The process typically involves:

Defining a Model: Establishing the mathematical or logical framework of the problem.

Generating Random Inputs: Using random sampling to create multiple scenarios.

Running Simulations: Executing the model with the random inputs to observe outcomes.

Analyzing Results: Aggregating and analyzing the simulation outcomes to draw conclusions or make predictions.
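As a generic illustration of those four steps (not part of the Wordle script), here is the classic Monte Carlo estimate of π: the model is a quarter circle inside a unit square, the random inputs are uniformly drawn points, each simulation checks whether a point lands inside the circle, and the analysis turns the hit fraction into an estimate.

```python
import random


def estimate_pi(n_samples, seed=42):
    """Monte Carlo estimate of pi using points drawn uniformly in the unit square."""
    rng = random.Random(seed)                # reproducible random inputs
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()    # one random point in the unit square
        if x * x + y * y <= 1.0:             # model: is it inside the quarter circle?
            inside += 1
    return 4.0 * inside / n_samples          # quarter-circle area / square area = pi / 4


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(n, estimate_pi(n))
```

The statistical error of the estimate shrinks roughly as 1/√n, which is why adding simulations makes the answer steadier but only slowly.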

Real-World Applications

The Monte Carlo method is widely used in various fields, including:

Finance: To evaluate risk and uncertainty in stock prices, investment portfolios, and financial derivatives.

Engineering: For reliability analysis, quality control, and optimization of complex systems.

Physics: In particle simulations, quantum mechanics, and statistical mechanics.

Medicine: For modeling the spread of diseases, treatment outcomes, and medical decision-making.

Climate Science: To predict weather patterns, climate change impacts, and environmental risks.

Applying Monte Carlo to Wordle

Objective

In the context of Wordle, our objective is to use the Monte Carlo method to predict the most likely five-letter words that can be the solution to the puzzle. We will simulate multiple guessing scenarios and evaluate the success rates of different words.

Python Code Explanation

Let's walk through the Python script that accomplishes this task.

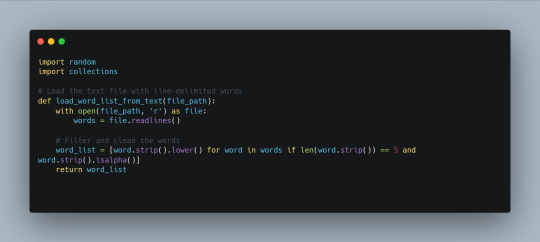

1. Loading the Word List

First, we need a list of acceptable five-letter Wordle words. We use the list of all 2,315 words that are, or will at some point become, the official Wordle answer. The script reads the words from a line-delimited text file and filters them to make sure they are valid.
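The script itself is only shown as a screenshot, so the following is a hypothetical reconstruction of the loading step. The validity rules used here (five ASCII letters, lowercased, duplicates dropped) are our assumptions.

```python
from pathlib import Path


def load_words(path):
    """Read a line-delimited word list and keep valid five-letter words, in order."""
    words = []
    seen = set()
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        word = line.strip().lower()
        # Keep only five ASCII letters, and skip words we've already seen.
        if len(word) == 5 and word.isascii() and word.isalpha() and word not in seen:
            seen.add(word)
            words.append(word)
    return words
```

Usage would be something like `words = load_words("wordle_answers.txt")`, where the file name is hypothetical. If the file holds the answer list described above, `len(words)` should be 2,315.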

2. Generating Feedback

To simulate Wordle guesses, we need a function to generate feedback based on the game's rules. This function compares the guessed word to the answer and provides feedback on the correctness of each letter.
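A sketch of such a feedback function, written by us rather than copied from the screenshot. It returns one character per letter ('G' green, 'Y' yellow, '-' grey) and uses two passes so repeated letters are scored the way the game scores them: exact matches first, then yellows only while unmatched copies of that letter remain in the answer.

```python
from collections import Counter


def get_feedback(guess, answer):
    """Return Wordle feedback: 'G' right letter and spot, 'Y' in word but wrong spot, '-' absent."""
    result = ["-"] * len(guess)
    remaining = Counter()
    # Pass 1: mark greens and count the answer letters that are still unmatched.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            remaining[a] += 1
    # Pass 2: mark yellows, consuming one unmatched copy of the letter each time.
    for i, g in enumerate(guess):
        if result[i] != "G" and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)
```

For example, `get_feedback("speed", "abide")` gives `"--Y-Y"`: only one of the two e's is marked yellow, because "abide" contains a single e.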

3. Simulating Wordle Games

The simulate_wordle function performs the Monte Carlo simulations. For each word in the list, it simulates multiple guessing rounds, keeping track of successful guesses within six attempts.

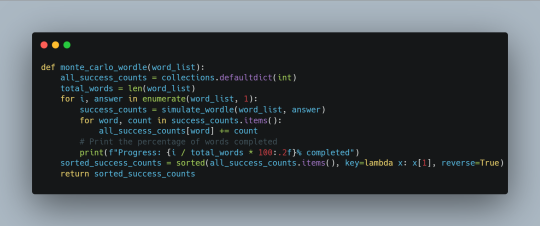

4. Aggregating Results

The monte_carlo_wordle function aggregates the results from all simulations to determine the most likely words. It also includes progress updates to monitor the percentage of words completed.

5. Running the Simulation

Finally, we load the word list from the text file and run the Monte Carlo simulations. The script prints the top 10 most likely words based on the simulation results.
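We can't see the simulation code, so this is a hypothetical reconstruction of steps 3 to 5 rather than the author's script. It assumes each simulation draws six distinct guesses uniformly at random, ignoring feedback, and counts a success when the target word is among them. Under that model every word has the same success probability, 6/2315, so the expected count is about 2.6 per 1,000 simulations and about 26 per 10,000; the `expected_successes` helper computes this.

```python
import random
from math import comb


def simulate_wordle(target, words, n_sims, rng, max_guesses=6):
    """Count runs in which `target` is among `max_guesses` distinct random guesses.

    Hypothetical reconstruction: the guesses ignore feedback entirely.
    """
    successes = 0
    for _ in range(n_sims):
        if target in rng.sample(words, max_guesses):
            successes += 1
    return successes


def monte_carlo_wordle(words, n_sims=100, top_n=10, seed=0):
    """Simulate every word as the target and return the top_n (word, successes) pairs."""
    rng = random.Random(seed)
    results = {}
    step = max(1, len(words) // 10)
    for i, word in enumerate(words, start=1):
        results[word] = simulate_wordle(word, words, n_sims, rng)
        if i % step == 0:  # progress update roughly every 10% of words
            print(f"{100 * i / len(words):.0f}% of words completed")
    return sorted(results.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


def expected_successes(n_words, n_sims, max_guesses=6):
    """Mean success count under this random model: n_sims * P(target among the guesses)."""
    p_miss = comb(n_words - 1, max_guesses) / comb(n_words, max_guesses)
    return n_sims * (1 - p_miss)
```

If the original script works this way, the words at the top of a ranking differ from the rest mainly by random variation. That would explain why the 1,000-run and 10,000-run lists below share only one word ("email").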

The Top 50 Words (Based on this approach)

For this article I amended the code so that each word is simulated 1,000 times instead of 100, to increase accuracy, and so that the script returns the top 50 words. Without further ado, here is the list of words most likely to succeed based on this Monte Carlo method:

trope: 10 successes

dopey: 9 successes

azure: 9 successes

theme: 9 successes

beast: 8 successes

prism: 8 successes

quest: 8 successes

brook: 8 successes

chick: 8 successes

batch: 7 successes

twist: 7 successes

twang: 7 successes

tweet: 7 successes

cover: 7 successes

decry: 7 successes

tatty: 7 successes

glass: 7 successes

gamer: 7 successes

rouge: 7 successes

jumpy: 7 successes

moldy: 7 successes

novel: 7 successes

debar: 7 successes

stave: 7 successes

annex: 7 successes

unify: 7 successes

email: 7 successes

kiosk: 7 successes

tense: 7 successes

trend: 7 successes

stein: 6 successes

islet: 6 successes

queen: 6 successes

fjord: 6 successes

sloth: 6 successes

ripen: 6 successes

hutch: 6 successes

waver: 6 successes

geese: 6 successes

crept: 6 successes

bring: 6 successes

ascot: 6 successes

lumpy: 6 successes

amply: 6 successes

eerie: 6 successes

young: 6 successes

glyph: 6 successes

curio: 6 successes

merry: 6 successes

atone: 6 successes

Edit: I ran the same code again, this time with 10,000 simulations per word. You can find the results below:

bluer: 44 successes

grown: 41 successes

motel: 41 successes

stole: 41 successes

abbot: 40 successes

lager: 40 successes

scout: 40 successes

smear: 40 successes

cobra: 40 successes

realm: 40 successes

queer: 39 successes

plaza: 39 successes

naval: 39 successes

tulle: 39 successes

stiff: 39 successes

hussy: 39 successes

ghoul: 39 successes

lumen: 38 successes

inter: 38 successes

party: 38 successes

purer: 38 successes

ethos: 38 successes

abort: 38 successes

drone: 38 successes

eject: 38 successes

wrath: 38 successes

chaos: 38 successes

posse: 38 successes

pudgy: 38 successes

widow: 38 successes

email: 38 successes

dimly: 38 successes

rebel: 37 successes

melee: 37 successes

pizza: 37 successes

heist: 37 successes

avail: 37 successes

nomad: 37 successes

sperm: 37 successes

raise: 37 successes

cruel: 37 successes

prude: 37 successes

latch: 37 successes

ninja: 37 successes

truth: 37 successes

pithy: 37 successes

spiky: 37 successes

tarot: 36 successes

ashen: 36 successes

trail: 36 successes

Conclusion

The Monte Carlo method provides a powerful and flexible approach to solving complex problems, making it an ideal tool for predicting the best Wordle guesses. By simulating multiple guessing scenarios and analyzing the outcomes, we can identify the words with the highest likelihood of being the solution. The Python script presented here leverages the comprehensive list of acceptable Wordle words from GitHub, demonstrating how statistical techniques can enhance our strategies in the game.

Of course, looking at the list itself, a player who entered the top six words from it would very rarely get the answer right. The method is probabilistic: although these words came out on top in the simulations, it does not learn as it goes along, so it would be considered "dumb".

Benefits of the Monte Carlo Approach

Data-Driven Predictions: The Monte Carlo method leverages extensive data to make informed predictions. By simulating numerous scenarios, it identifies patterns and trends that may not be apparent through simple observation or random guessing.

Handling Uncertainty: Wordle involves a significant degree of uncertainty, as the correct word is unknown and guesses are constrained by limited attempts. The Monte Carlo approach effectively manages this uncertainty by exploring a wide range of possibilities.

Scalability: The method can handle large datasets, such as the full list of acceptable Wordle words from GitHub. This scalability ensures that the predictions are based on a comprehensive dataset, enhancing their accuracy.

Optimization: By identifying the top 50 words with the highest success rates, the Monte Carlo method provides a focused list of guesses, optimizing the strategy for solving Wordle puzzles.

Practical Implications

The application of the Monte Carlo approach to Wordle demonstrates its practical value in real-world scenarios. The method can be implemented using Python, with scripts that read word lists, simulate guessing scenarios, and aggregate results. This practical implementation highlights several key aspects:

Efficiency: The Monte Carlo method streamlines the guessing process by focusing on the most promising words, reducing the number of attempts needed to solve the puzzle.

User-Friendly: The approach can be easily adapted to provide real-time feedback and progress updates, making it accessible and user-friendly for Wordle enthusiasts.

Versatility: While this essay focuses on Wordle, the Monte Carlo method’s principles can be applied to other word games and puzzles, showcasing its versatility.

Specific Weaknesses in the Context of Wordle

1. Non-Deterministic Nature: The Monte Carlo method provides probabilistic predictions rather than deterministic solutions. This means that it cannot guarantee the correct Wordle word but rather offers statistically informed guesses. There is always an element of uncertainty.

2. Dependence on Word List Quality: The accuracy of predictions depends on the comprehensiveness and accuracy of the word list used. If the word list is incomplete or contains errors, the predictions will be less reliable.

3. Time Consumption: Running simulations for a large word list (e.g., thousands of words) can be time-consuming, especially on average computing hardware. This can limit its practicality for users who need quick results.

4. Simplified Feedback Model: The method uses a simplified model to simulate Wordle feedback, which may not capture all nuances of human guessing strategies or advanced linguistic patterns. This can affect the accuracy of the predictions.

The House always wins with Monte Carlo! Is there a better way?

There are several alternative approaches and techniques to improve the Wordle guessing strategy beyond the Monte Carlo method. Each has its own strengths and can be tailored to provide effective results. Here are a few that might offer better or complementary strategies:

1. Machine Learning Models

Using machine learning models can provide a sophisticated approach to predicting Wordle answers:

Neural Networks: Train a neural network on past Wordle answers and feedback. This approach can learn complex patterns and relationships in the data, potentially providing highly accurate predictions.

Support Vector Machines (SVMs): Use SVMs for classification tasks based on features extracted from previous answers. This method can effectively distinguish between likely and unlikely words.

2. Heuristic Algorithms

Heuristic approaches can provide quick and effective solutions:

Greedy Algorithm: This method chooses the best option at each step based on a heuristic, such as maximizing the number of correct letters or minimizing uncertainty. It's simple and fast but may not always find the optimal solution.

Simulated Annealing: This probabilistic technique searches for a global optimum by exploring different solutions and occasionally accepting worse solutions to escape local optima. It can be more effective than a greedy algorithm in finding better solutions.

3. Bayesian Inference

Bayesian inference provides a probabilistic approach to updating beliefs based on new information:

Bayesian Models: Use Bayes’ theorem to update the probability of each word being correct based on feedback from previous guesses. This approach combines prior knowledge with new evidence to make informed guesses.

Hidden Markov Models (HMMs): HMMs can model sequences and dependencies in data, useful for predicting the next word based on previous feedback.

4. Rule-Based Systems

Using a set of predefined rules and constraints can systematically narrow down the list of possible words:

Constraint Satisfaction: This approach systematically applies rules based on Wordle feedback (correct letter in the correct position, correct letter in the wrong position, letter absent from the word) to eliminate every word that is inconsistent with the feedback received so far.

Decision Trees: Construct a decision tree based on the feedback received to explore different guessing strategies. Each node represents a guess, and branches represent the feedback received.
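Both the constraint-satisfaction filter and a single decision-tree node fit in a few lines of Python. Rather than encoding each rule separately, this sketch keeps a word only if it would have produced exactly the feedback observed for every previous guess. That is an illustrative choice, and it also handles repeated letters correctly. `partition` groups candidates by the feedback a guess would produce, which gives the branches under one tree node. The function names, the "G"/"Y"/"-" encoding, and the word list are hypothetical.

```python
from collections import Counter, defaultdict


def feedback(guess, answer):
    """Wordle-style feedback string: 'G' green, 'Y' yellow, '-' gray (repeated letters handled)."""
    result = ["-"] * len(guess)
    unmatched = Counter()
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            unmatched[a] += 1
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1
    return "".join(result)


def consistent(candidates, history):
    """Constraint satisfaction: keep words that reproduce every (guess, feedback) pair in history."""
    return [w for w in candidates if all(feedback(g, w) == fb for g, fb in history)]


def partition(candidates, guess):
    """One decision-tree node: each distinct feedback pattern is a branch listing its candidates."""
    branches = defaultdict(list)
    for w in candidates:
        branches[feedback(guess, w)].append(w)
    return dict(branches)


words = ["crane", "crate", "crake", "trace", "slate"]  # hypothetical word list
remaining = consistent(words, [("crane", "GGG-G")])
print(remaining)                      # ['crate', 'crake']
print(partition(remaining, "crate"))  # {'GGGGG': ['crate'], 'GGG-G': ['crake']}
```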

5. Information Theory

Using concepts from information theory can help to reduce uncertainty and optimize guesses:

Entropy-Based Methods: Measure the uncertainty of a system using information entropy and make guesses that maximize the information gained. By choosing words that provide the most informative feedback, these methods can quickly narrow down the possibilities.
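Here is a minimal Python sketch of an entropy-based choice. It assumes every remaining candidate is equally likely to be the answer. A guess's expected information is then the Shannon entropy, in bits, of the feedback patterns it would produce across those candidates. The function names, feedback encoding, and words are illustrative.

```python
import math
from collections import Counter


def feedback(guess, answer):
    """Wordle-style feedback string: 'G' green, 'Y' yellow, '-' gray (repeated letters handled)."""
    result = ["-"] * len(guess)
    unmatched = Counter()
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            unmatched[a] += 1
    for i, g in enumerate(guess):
        if result[i] == "-" and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1
    return "".join(result)


def expected_information(guess, candidates):
    """Entropy (in bits) of the feedback pattern, assuming each candidate answer is equally likely."""
    patterns = Counter(feedback(guess, answer) for answer in candidates)
    n = len(candidates)
    return -sum((count / n) * math.log2(count / n) for count in patterns.values())


def best_guess(guesses, candidates):
    """Choose the guess whose feedback is expected to reveal the most information."""
    return max(guesses, key=lambda g: expected_information(g, candidates))


candidates = ["crate", "grate", "irate", "crake"]  # hypothetical remaining answers
print(expected_information("slate", candidates))   # about 0.81 bits: three answers share one pattern
print(expected_information("crate", candidates))   # 1.5
print(best_guess(["slate", "crate"], candidates))  # crate
```

A fuller solver would usually score guesses from the whole allowed-word list rather than only the remaining candidates. It might also weight candidates by a prior instead of treating them as equally likely.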

Whether you're a Wordle enthusiast or a data science aficionado, the Monte Carlo method offers a fascinating glimpse into the intersection of statistics and gaming. Happy Wordle solving!


Text

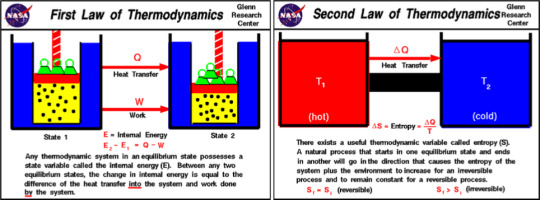

My conflict theory

Please forgive the preamble on physics. First, there is classical mechanics and its three-body problem. Here one might focus on the motion of bodies under the influence of forces, including mass and energy interactions in macroscopic systems. This entails Newton's laws, momentum, energy conservation, and mechanical equilibrium. In thermodynamics and statistical mechanics, one might also explore the relationships among heat (or energy), work, and the physical properties of systems at both macroscopic and microscopic levels. These entail energy transfer, entropy, phase transitions, and the statistical behavior of particles.

Additionally, there's electromagnetism, the study of electric and magnetic fields and their interaction with matter, which involves forces, energy transfer, and the motion of charged particles.

One mustn't omit Maxwell's equations, electromagnetic waves, and energy in electromagnetic fields, either. Nor should one omit relativity, which is relevant here because it deals with conflicts and reconciliations between mass, energy, and the nature of spacetime. Special relativity points to the equivalence of mass and energy (E=mc²) and shows how motion affects time and space. General relativity examines how massive objects warp spacetime, influencing the behavior of other masses and light. Nearly last, we come to quantum mechanics, which looks at the behavior of particles on very small scales, where energy, mass, and force manifest as probabilistic phenomena, not to mention wave-particle duality, quantum states, and energy quantization.

There's also particle physics, which investigates the fundamental particles, such as quarks, leptons, and bosons, as well as the forces that govern their interactions.

Skipping over astrophysics and cosmology, materials science and solid-state physics, and plasma physics, we come to nonlinear dynamics and chaos theory. These study systems in which small changes in force, mass, or energy lead to unpredictable outcomes, and they are often used to model natural phenomena.

——————————————————————————

In all these approaches to describing the mechanics of nature, we can't ignore the unpredictable and inherent conflict on every scale, at every stage, and from every perspective when it comes to the material, describable world. Politically, socially, and interpersonally, these conflicts render our engineering of polities, societies, and utopias totally futile. They must eventually fail because of their conditions and consequences, which are not just outside our control; without those conditions and consequences, they would cease to be entirely. That is, the outskirts of the city must always crumble. The lotus of that city, however vibrant, must always evolve and adapt, and it will eventually die anyway. “Eventually” here need not mean nothing. It correctly contextualizes the assumptions made about it by its author and readers alike.

Conflict isn’t some systemic clash between the classes. It’s in your tea, the cup, the handle, the table, the counter; it’s in your thumbnail, the enamel on your teeth. It’s everywhere. This is why politics can’t ever be something that satisfies everyone in any system. Not even close. This is also why governments are not so much good or bad as they are convenient or inconvenient. Our approximations of good and bad are not even uniform within our individual lifetime selves, let alone within some temporal snapshot self, given the innumerable scales on which other values rest. Humans are just as polyvalent in those snapshot selves as they are in the album of selves that is their life. Society has agreed-upon moral approximations, but again, society isn’t uniform in these approximations. Hence the economies of convenience.

War isn’t the exception but the rule. Divorce and separation aren’t the exception but the rule. Ethics attempts to negotiate the ontology of unity. This is the role of utilitarianism, deontology, and the like. Pleasure, health, and flourishing, along with obligation, honor, and duty, are not all that circumstance can present to agency (and indeed to social agency) through the lens of value. So these systems can only litigate for so many and for only so long. Eventually still other axiologies must be propounded, and those will themselves encounter still other situations and scenarios where their jurisdictions end because of conflict.

One might wonder, then: what about something we assume to be stable? Something that lasts? The planet? The sun? Surely, you might add, we can’t argue that lasting is merely perception, or that patterns aren’t patterns merely because their consequences or conditions lie beyond them as patterns. That is, why do these persist without failure or death or some other form of cessation? However, this is a misunderstanding of conflict. Conflict doesn’t mean that nothing can be quantified and thus described. It means that nothing, bound as it is by condition and consequence, is truly free from constraint. Because of either or both, everything is eventually broken down or undone. Perhaps it is like saying conflict is a shaking box. Inside the shaking box are contents that move in every direction. With enough time, these things break down because of the momentum, friction, and clashing caused by the shaking of the box. The contents shrink and shrink and shrink until, shrunk that far, they are beyond human perception. This can’t not happen. To everything.

These are the thermodynamics of war. This is conflict.

0 notes

Text

MIT affiliates receive 2024-25 awards and honors from the American Physical Society

New Post has been published on https://thedigitalinsider.com/mit-affiliates-receive-2024-25-awards-and-honors-from-the-american-physical-society/


A number of individuals with MIT ties have received honors from the American Physical Society (APS) for 2024 and 2025.

Awardees include Professor Frances Ross; Professor Vladan Vuletić, graduate student Jiliang Hu ’19, PhD ’24; as well as 10 alumni. New APS Fellows include Professor Joseph Checkelsky, Senior Researcher John Chiaverini, Associate Professor Areg Danagoulian, Professor Ruben Juanes, and seven alumni.

Frances M. Ross, the TDK Professor in Materials Science and Engineering, received the 2025 Joseph F. Keithley Award For Advances in Measurement Science “for groundbreaking advances in in situ electron microscopy in vacuum and liquid environments.”

Ross uses transmission electron microscopy to watch crystals as they grow and react under different conditions, including both liquid and gaseous environments. The microscopy techniques developed over Ross’ research career help in exploring growth mechanisms during epitaxy, catalysis, and electrochemical deposition, with applications in microelectronics and energy storage. Ross’ research group continues to develop new microscopy instrumentation to enable deeper exploration of these processes.

Vladan Vuletić, the Lester Wolfe Professor of Physics, received the 2025 Arthur L. Schawlow Prize in Laser Science “for pioneering work on spin squeezing for optical atomic clocks, quantum nonlinear optics, and laser cooling to quantum degeneracy.” Vuletić’s research includes ultracold atoms, laser cooling, large-scale quantum entanglement, quantum optics, precision tests of physics beyond the Standard Model, and quantum simulation and computing with trapped neutral atoms.

His Experimental Atomic Physics Group is also affiliated with the MIT-Harvard Center for Ultracold Atoms and the Research Laboratory of Electronics (RLE). In 2020, his group showed that the precision of current atomic clocks could be improved by entangling the atoms — a quantum phenomenon by which particles are coerced to behave in a collective, highly correlated state.

Jiliang Hu received the 2024 Award for Outstanding Doctoral Thesis Research in Biological Physics “for groundbreaking biophysical contributions to microbial ecology that bridge experiment and theory, showing how only a few coarse-grained features of ecological networks can predict emergent phases of diversity, dynamics, and invasibility in microbial communities.”

Hu is working in PhD advisor Professor Jeff Gore’s lab. He is interested in exploring the high-dimensional dynamics and emergent phenomena of complex microbial communities. In his first project, he demonstrated that multi-species communities can be described by a phase diagram as a function of the strength of interspecies interactions and the diversity of the species pool. He is now studying alternative stable states and the role of migration in the dynamics and biodiversity of metacommunities.

Alumni receiving awards:

Riccardo Betti PhD ’92 is the 2024 recipient of the John Dawson Award in Plasma Physics “for pioneering the development of statistical modeling to predict, design, and analyze implosion experiments on the 30kJ OMEGA laser, achieving hot spot energy gains above unity and record Lawson triple products for direct-drive laser fusion.”

Javier Mauricio Duarte ’10 received the 2024 Henry Primakoff Award for Early-Career Particle Physics “for accelerating trigger technologies in experimental particle physics with novel real-time approaches by embedding artificial intelligence and machine learning in programmable gate arrays, and for critical advances in Higgs physics studies at the Large Hadron Collider in all-hadronic final states.”

Richard Furnstahl ’18 is the 2025 recipient of the Feshbach Prize in Theoretical Nuclear Physics “for foundational contributions to calculations of nuclei, including applying the Similarity Renormalization Group to the nuclear force, grounding nuclear density functional theory in those forces, and using Bayesian methods to quantify the uncertainties in effective field theory predictions of nuclear observables.”

Harold Yoonsung Hwang ’93, SM ’93 is the 2024 recipient of the James C. McGroddy Prize for New Materials “for pioneering work in oxide interfaces, dilute superconductivity in heterostructures, freestanding oxide membranes, and superconducting nickelates using pulsed laser deposition, as well as for significant early contributions to the physics of bulk transition metal oxides.”

James P. Knauer ’72 received the 2024 John Dawson Award in Plasma Physics “for pioneering the development of statistical modeling to predict, design, and analyze implosion experiments on the 30kJ OMEGA laser, achieving hot spot energy gains above unity and record Lawson triple products for direct-drive laser fusion.”

Sekazi Mtingwa ’71 is the 2025 recipient of the John Wheatley Award “for exceptional contributions to capacity building in Africa, the Middle East, and other developing regions, including leadership in training researchers in beamline techniques at synchrotron light sources and establishing the groundwork for future facilities in the Global South.”

Michael Riordan ’68, PhD ’73 received the 2025 Abraham Pais Prize for History of Physics, which “recognizes outstanding scholarly achievements in the history of physics.”

Charles E. Sing PhD ’12 received the 2024 John H. Dillon Medal “for pioneering advances in polyelectrolyte phase behavior and polymer dynamics using theory and computational modeling.”

David W. Taylor ’01 received the 2025 Jonathan F. Reichert and Barbara Wolff-Reichert Award for Excellence in Advanced Laboratory Instruction “for continuous physical measurement laboratory improvements, leveraging industrial and academic partnerships that enable innovative and diversified independent student projects, and giving rise to practical skillsets yielding outstanding student outcomes.”

Wennie Wang ’13 is the 2025 recipient of the Maria Goeppert Mayer Award “for outstanding contributions to the field of materials science, including pioneering research on defective transition metal oxides for energy sustainability, a commitment to broadening participation of underrepresented groups in computational materials science, and leadership and advocacy in the scientific community.”

APS Fellows

Joseph Checkelsky, the Mitsui Career Development Associate Professor of Physics, received the 2024 Division of Condensed Matter Physics Fellowship “for pioneering contributions to the synthesis and study of quantum materials, including kagome and pyrochlore metals and natural superlattice compounds.”

Affiliated with the MIT Materials Research Laboratory and the MIT Center for Quantum Engineering, Checkelsky is working at the intersection of materials synthesis and quantum physics to discover new materials and physical phenomena to expand the boundaries of understanding of quantum mechanical condensed matter systems, as well as open doorways to new technologies by realizing emergent electronic and magnetic functionalities. Research in Checkelsky’s lab focuses on the study of exotic electronic states of matter through the synthesis, measurement, and control of solid-state materials. His research includes studying correlated behavior in topologically nontrivial materials, the role of geometrical phases in electronic systems, and novel types of geometric frustration.

John Chiaverini, a senior staff member in the Quantum Information and Integrated Nanosystems group and an MIT principal investigator in RLE, was elected a 2024 Fellow of the American Physical Society in the Division of Quantum Information “for pioneering contributions to experimental quantum information science, including early demonstrations of quantum algorithms, the development of the surface-electrode ion trap, and groundbreaking work in integrated photonics for trapped-ion quantum computation.”

Chiaverini is pursuing research in quantum computing and precision measurement using individual atoms. Currently, Chiaverini leads a team developing novel technologies for control of trapped-ion qubits, including trap-integrated optics and electronics; this research has the potential to allow scaling of trapped-ion systems to the larger numbers of ions needed for practical applications while maintaining high levels of control over their quantum states. He and the team are also exploring new techniques for the rapid generation of quantum entanglement between ions, as well as investigating novel encodings of quantum information that have the potential to yield higher-fidelity operations than currently available while also providing capabilities to correct the remaining errors.

Areg Danagoulian, associate professor of nuclear science and engineering, received the 2024 Forum on Physics and Society Fellowship “for seminal technological contributions in the field of arms control and cargo security, which significantly benefit international security.”

His current research interests focus on nuclear physics applications in societal problems, such as nuclear nonproliferation, technologies for arms control treaty verification, nuclear safeguards, and cargo security. Danagoulian also serves as the faculty co-director for MIT’s MISTI Eurasia program.

Ruben Juanes, professor of civil and environmental engineering and earth, atmospheric and planetary sciences (CEE/EAPS), received the 2024 Division of Fluid Dynamics Fellowship “for fundamental advances — using experiments, innovative imaging, and theory — in understanding the role of wettability for controlling the dynamics of fluid displacement in porous media and geophysical flows, and exploiting this understanding to optimize.”

An expert in the physics of multiphase flow in porous media, Juanes uses a mix of theory, computation, and real-life experiments to establish a fundamental understanding of how different fluids such as oil, water, and gas move through rocks, soil, or underwater reservoirs, in order to solve energy-related and environmental geophysical problems. His major contributions include improving the safety and effectiveness of carbon sequestration, advancing the understanding of fluid interactions in porous media for energy and environmental applications, developing imaging and computational techniques for real-time monitoring of subsurface fluid flows, and providing insights into how underground fluid movement contributes to landslides, floods, and earthquakes.

Alumni receiving fellowships:

Constantia Alexandrou PhD ’85 is the 2024 recipient of the Division of Nuclear Physics Fellowship “for the pioneering contributions in calculating nucleon structure observables using lattice QCD.”

Daniel Casey PhD ’12 received the 2024 Division of Plasma Physics Fellowship “for outstanding contributions to the understanding of the stagnation conditions required to achieve ignition.”

Maria K. Chan PhD ’09 is the 2024 recipient of the Topical Group on Energy Research and Applications Fellowship “for contributions to methodological innovations, developments, and demonstrations toward the integration of computational modeling and experimental characterization to improve the understanding and design of renewable energy materials.”

David Humphreys ’82, PhD ’91 received the 2024 Division of Plasma Physics Fellowship “for sustained leadership in developing the field of model-based dynamic control of magnetically confined plasmas, and for providing important and timely contributions to the understanding of tokamak stability, disruptions, and halo current physics.”

Eric Torrence PhD ’97 received the 2024 Division of Particles and Fields Fellowship “for significant contributions with the ATLAS and FASER Collaborations, particularly in the searches for new physics, measurement of the LHC luminosity, and for leadership in the operations of both experiments.”

Tiffany S. Santos ’02, PhD ’07 is the 2024 recipient of the Topical Group on Magnetism and Its Applications Fellowship “for innovative contributions in synthesis and characterization of novel ultrathin magnetic films and interfaces, and tailoring their properties for optimal performance, especially in magnetic data storage and spin-transport devices.”

Lei Zhou ’14, PhD ’19 received the 2024 Forum on Industrial and Applied Physics Fellowship “for outstanding and sustained contributions to the fields of metamaterials, especially for proposing metasurfaces as a bridge to link propagating waves and surface waves.”


Text

Master CUET PG Physics 2025: A Comprehensive Guide to the Syllabus

The CUET PG Physics 2025 exam offers an exciting opportunity for aspiring postgraduate students to enter prestigious universities and kick start their academic journey in physics. To excel in this highly competitive exam, a well-structured and thorough understanding of the CUET PG Physics syllabus 2025 is essential. This syllabus serves as the blueprint for your preparation, guiding you through the key topics and concepts that will be assessed in the exam.

In this blog, we will dive deep into the CUET PG Physics syllabus, explore how to download the CUET PG Physics syllabus PDF, and outline effective strategies to tackle each section, ensuring you are well-prepared for success.

Why Understanding the CUET PG Physics Syllabus 2025 is Essential

The CUET PG Physics syllabus 2025 is the foundation upon which your preparation should be built. Without a clear grasp of the topics covered, students may waste valuable time studying irrelevant content. A detailed review of the syllabus ensures that you can focus your efforts on the most important areas, allocate your study time effectively, and maximize your score in the exam.

CUET PG Physics Syllabus: Key Areas of Focus

The CUET PG Physics syllabus typically covers a broad spectrum of topics that build upon the concepts introduced at the undergraduate level. Here’s an overview of the key sections you need to focus on:

1. Classical Mechanics:

- Newton's laws and their applications

- Lagrangian and Hamiltonian formulations

- Motion of rigid bodies and the theory of small oscillations

2. Electromagnetic Theory:

- Electrostatics and magnetostatics

- Maxwell’s equations

- Electromagnetic waves and their properties

- Wave propagation in different media

3. Quantum Mechanics:

- Schrödinger’s equation and its applications

- Heisenberg’s uncertainty principle

- Quantum states, operators, and wave functions

- Perturbation theory and applications in atomic systems

4. Thermodynamics and Statistical Mechanics:

- Laws of thermodynamics

- Kinetic theory of gases

- Statistical ensembles

- Bose-Einstein and Fermi-Dirac statistics

5. Optics:

- Interference and diffraction of light

- Polarization

- Lasers and their applications

6. Nuclear and Particle Physics:

- Nuclear forces and models

- Radioactive decay and reactions

- Elementary particles and symmetries

7. Solid State Physics:

- Crystal structures and bonding

- Lattice vibrations and phonons

- Band theory and electrical properties of materials

These are the fundamental areas, but the CUET PG Physics syllabus 2025 may also include topics such as electronics, mathematical methods in physics, and more advanced quantum mechanics, depending on the university’s requirements.

CUET PG Physics Syllabus PDF: A Handy Resource for Preparation

To stay organized and ensure you don't miss any important topics, downloading the CUET PG Physics syllabus PDF is highly recommended. This document provides a detailed breakdown of all the subjects and subtopics included in the exam, offering a structured approach to your preparation.

Here’s why the CUET PG Physics syllabus PDF is an essential resource:

- Easy reference: The PDF allows you to easily access the syllabus anytime, anywhere, without needing an internet connection. You can use it as a checklist while preparing each topic.

- Focused preparation: The syllabus PDF helps you stay on track, ensuring you focus only on the relevant areas.

- Better time management: With a clear view of the syllabus, you can allocate sufficient time for each section, making your preparation more efficient.

You can download the CUET PG Physics syllabus PDF from the official CUET PG website or through your university’s admission portal.

How to Use the CUET PG Physics Syllabus for Effective Preparation

Now that you have a solid understanding of the syllabus, it’s time to plan your preparation. Here’s a step-by-step approach to mastering the CUET PG Physics syllabus 2025:

1. Break Down the Syllabus: Divide the syllabus into manageable sections. Focus on one area at a time, such as classical mechanics or quantum mechanics, and ensure you cover all the related subtopics.

2. Understand Key Concepts: Physics is a subject that requires a deep understanding of concepts. Make sure you grasp the fundamental principles behind each topic, as rote learning will not help in solving complex problems.

3. Practice Numerical Problems: Solving numerical problems is crucial for CUET PG Physics. Practice problems from each section, focusing on problem-solving techniques and improving your speed and accuracy.

4. Solve Previous Year Question Papers: Past question papers are invaluable resources for understanding the exam pattern and identifying important topics. By solving these papers, you can get a feel for the type of questions asked and the difficulty level of the exam. Incorporate previous year papers into your study plan to assess your progress and fine-tune your preparation.

5. Revise Regularly: Physics involves a lot of formulas and principles. Regular revision is essential to keep these concepts fresh in your memory. Create a revision timetable and stick to it religiously.

6. Take Mock Tests: Mock tests are an excellent way to simulate the actual exam environment. They help you improve your time management skills, build confidence, and identify areas where you need further improvement.

CUET PG Physics Exam Pattern

In addition to knowing the syllabus, it is important to familiarize yourself with the CUET PG Physics exam pattern. Here’s a brief overview:

- Type of Questions: The exam consists of multiple-choice questions (MCQs), testing both theoretical knowledge and problem-solving skills.

- Marks Distribution: Typically, each correct answer is awarded four marks, and there may be negative marking (usually one mark deducted for every incorrect answer).

- Duration: The exam is conducted over 2 hours.
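The marking scheme above is simple arithmetic. The Python sketch below assumes the +4 / −1 scheme the post describes as typical; check the official notification for the actual values. It also assumes, hypothetically, that each question has four options.

```python
def cuet_score(correct, incorrect, marks_correct=4, penalty=1):
    """Net marks: +marks_correct per right answer, -penalty per wrong one, 0 for unanswered."""
    return correct * marks_correct - incorrect * penalty


def expected_guess_value(options_left, marks_correct=4, penalty=1):
    """Expected marks from guessing uniformly at random among the options still in play."""
    p_right = 1 / options_left
    return p_right * marks_correct - (1 - p_right) * penalty


print(cuet_score(correct=50, incorrect=10))  # 190
print(expected_guess_value(4))  # 0.25
print(expected_guess_value(2))  # 1.5
```

Under these assumptions, a blind guess among four options is worth +0.25 marks on average, and eliminating options raises that expectation. A different penalty changes the break-even point, so plug in the official figures.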

Understanding the exam pattern, in conjunction with the syllabus, helps you approach the exam with a clear strategy.

Conclusion

Mastering the CUET PG Physics syllabus 2025 is crucial for performing well in the exam and securing admission to a top postgraduate program. With a clear understanding of the syllabus, access to the CUET PG Physics syllabus PDF, and a well-structured study plan, you can boost your preparation and confidence.

Be sure to focus on key concepts, practice solving problems regularly, and use previous year question papers to gauge your progress. By following these steps, you’ll be well on your way to acing the CUET PG Physics 2025 exam and achieving your academic goals!


Text

NA62 announces its first search for long-lived particles

Probing rare particle physics processes is like looking for a needle in a haystack, but to find the needle we first need the haystack: a large amount of statistical data collected at high-luminosity experiments. The NA62 experiment, also known as CERN’s kaon factory, produces this haystack of collision data to allow physicists to study rare particle physics processes and look for weakly interacting new physics particles. The collaboration recently presented the results of its first search for long-lived new physics particles at the 42nd International Conference on High Energy Physics in Prague.

“While experiments at the Large Hadron Collider are known to push the energy frontier with proton–proton collisions at the world-record energy of 13.6 trillion electronvolts, fixed-target experiments like NA62 are pushing the intensity frontier with a billion billion (10¹⁸) protons on target per year,” said Jan Jerhot, a postdoctoral researcher at the Max Planck Institute for Physics, who led the analysis of the latest NA62 results. These collisions at NA62, a fixed-target experiment, result in up to 10¹² positively charged kaon (K⁺) decays per year, a much higher luminosity than can be reached in collider experiments. Fixed-target approaches and collider experiments complement each other in their quest to find new physics beyond the Standard Model. The excellent energy, momentum and time resolutions of the NA62 detector make it possible to search for the rarest processes in these large datasets.
The NA62 experiment operates in two modes. In standard kaon mode, it mostly studies rare kaon processes, such as a kaon transforming into a positively charged pion and a neutrino–antineutrino pair (denoted K⁺ → π⁺νν̄). In beam-dump mode, the proton beam from the Super Proton Synchrotron is dumped in an absorber, allowing searches for new, heavy particles with double the proton intensity of kaon mode. At ICHEP, NA62 shared the preliminary results of its search for a long-lived new physics particle using data obtained with 1.4 × 10¹⁷ protons on target from the beam-dump operation in 2021. The collaboration specifically looked for the decays of a beyond-the-Standard-Model particle into two charged hadrons, such as pions and kaons, and for the decay of neutral hadrons into photons. These are possible decays of new physics particles in beyond-the-Standard-Model theories. Such particles, including axion-like particles, dark photons and dark Higgs bosons, are promising candidates to explain elusive dark matter.

Felix Kahlhoefer, a professor of theoretical physics at the Karlsruhe Institute of Technology, Germany, explains that because the recent NA62 results are model-independent, the physics community worldwide can reinterpret them to constrain many different models beyond the Standard Model. “We simultaneously obtain information for a whole class of decay channels, so it becomes possible to distinguish different models of long-lived particles beyond the Standard Model,” he said.

The distance between the NA62 target and the calorimeters is more than 240 metres, making the experiment well suited to searching for long-lived new physics particles that fly a macroscopic distance before decaying. Such particles are especially difficult for other particle detectors at the LHC, such as ATLAS (46 metres long) and CMS (21 metres long), to detect, because they may leave the detector before decaying.
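The exponential decay law shows why a long baseline helps with long-lived particles. A particle with lab-frame mean decay length λ = βγcτ = (p/m)cτ decays between distances z₁ and z₂ from its production point with probability e^(−z₁/λ) − e^(−z₂/λ). The Python sketch below uses hypothetical numbers, not NA62's actual geometry, and ignores detector acceptance, efficiencies and backgrounds. It only shows that, for a very long-lived particle, a decay region far downstream can capture more decays than a short detector close to the production point.

```python
import math


def lab_decay_length(momentum_gev, mass_gev, ctau_m):
    """Mean lab-frame decay length beta*gamma*c*tau = (p/m)*c*tau, in metres."""
    return (momentum_gev / mass_gev) * ctau_m


def decay_probability(z_start_m, z_end_m, decay_length_m):
    """Probability that the particle decays between z_start_m and z_end_m from its production point."""
    return math.exp(-z_start_m / decay_length_m) - math.exp(-z_end_m / decay_length_m)


# Hypothetical particle: 10 GeV momentum, 1 GeV mass, c*tau = 100 m, so lambda = 1000 m.
lam = lab_decay_length(10.0, 1.0, 100.0)
near = decay_probability(0.0, 20.0, lam)    # hypothetical short detector at the production point
far = decay_probability(100.0, 200.0, lam)  # hypothetical longer decay region far downstream
print(f"lambda = {lam:.0f} m, near = {near:.3f}, far = {far:.3f}")
# lambda = 1000 m, near = 0.020, far = 0.086
```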
Exploring these uncharted territories can address some of the problems that cannot be explained by the robust Standard Model. While no evidence of a new physics signal was found in this latest analysis, NA62 has been able to exclude new regions of masses and interaction strengths in beyond-the-Standard-Model theories. Physicists at NA62 plan to study seven times more… https://home.cern/news/news/physics/na62-announces-its-first-search-long-lived-particles (Source of the original content)


Text

The Role of AI & ML in Advancing Scientific Research and Discovery

Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing the world of scientific research. These technologies are not just buzzwords; they are powerful tools that are helping scientists make new discoveries faster and more efficiently than ever before. From analyzing massive datasets to predicting complex patterns, AI and ML are becoming essential in the advancement of science.

Speeding Up Data Analysis

One of the biggest challenges in scientific research is handling the sheer amount of data generated. Whether it's genomic sequences, climate models, or particle physics experiments, researchers are often overwhelmed by the volume of information they need to process. AI and ML are changing the game by automating data analysis. These technologies can sift through vast datasets at lightning speed, identifying patterns and insights that would take humans years to find.

For example, in the field of genomics, AI algorithms can analyze DNA sequences to identify genes associated with diseases. This rapid analysis can lead to quicker discoveries of potential treatments and a better understanding of genetic disorders.

Enhancing Predictive Modeling

Predictive modeling is another area where AI and ML are making a significant impact. Traditional models are often limited by their reliance on human-defined rules and assumptions. However, AI and ML can create models that learn from data without needing explicit instructions. This allows for more accurate predictions in complex systems.

In climate science, for instance, AI models are used to predict weather patterns and the impact of climate change. These models can analyze data from various sources, such as satellite images and historical weather data, to provide more reliable forecasts and help policymakers make informed decisions.

Accelerating Drug Discovery

The process of discovering new drugs is typically long and expensive, often taking years and billions of dollars to bring a new treatment to market. AI and ML are transforming this process by identifying promising drug candidates more quickly. These technologies can simulate how different compounds interact with biological systems, reducing the need for costly and time-consuming laboratory experiments.

In recent years, AI has been used to identify new antibiotics, antiviral drugs, and treatments for diseases like cancer. By speeding up the drug discovery process, AI and ML have the potential to save lives and bring new therapies to patients faster.

Facilitating Interdisciplinary Research

AI and ML are also breaking down barriers between different scientific disciplines. These technologies can integrate data and methods from various fields, enabling researchers to tackle complex problems that require a multidisciplinary approach. For example, AI is widely used in bioinformatics, a field that combines biology, computer science, and statistics to analyze biological data.

This interdisciplinary approach is leading to new insights and discoveries that wouldn't be possible within the confines of a single discipline. It encourages collaboration and the sharing of knowledge across fields, further advancing scientific research.

Conclusion

Artificial Intelligence and Machine Learning are transforming the landscape of scientific research and discovery. By automating data analysis, enhancing predictive modeling, accelerating drug discovery, and facilitating interdisciplinary research, these technologies are helping scientists push the boundaries of knowledge. As AI and ML continue to evolve, their role in advancing science will only grow, leading to even more groundbreaking discoveries in the years to come.

#ai#artificial intelligence#best btech colleges in india#btech course#aimlsolutions#ai ml development services

Text

Physics rant I didn't think would fit in a comment lol

quantum is indeed super interesting, a lot of very strange and unexpected results come from quantum effects! quantum tunnelling is one that always springs to mind -- you can observe a small fraction of particles passing through thin energy barriers that they classically don't have enough energy to get over, because the wavefunction doesn't drop to zero inside the barrier: it decays exponentially, so if the barrier is thin enough some of it survives on the other side and boom: a few particles show up there. and people are trying to use quantum effects for a lot of new technology -- quantum computing, sensors, encryption, etc -- and depending on the task these can be way more efficient/useful than classical methods.
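To put rough numbers on "a small fraction of particles", here is a sketch using the standard thick-barrier estimate T ≈ exp(-2κL) for a rectangular barrier, where κ = √(2m(V - E))/ħ. It assumes an electron and a barrier 1 eV above its energy (illustrative values), and it drops an order-one prefactor from the exact formula, so treat the outputs as order-of-magnitude only.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # electron mass, kg
EV = 1.602176634e-19     # 1 electron-volt in joules

def transmission_estimate(barrier_minus_energy_ev: float, width_m: float, mass_kg: float = M_E) -> float:
    """Thick-barrier estimate T ~ exp(-2*kappa*L) for a rectangular barrier with E < V."""
    # kappa is the decay constant of the wavefunction inside the barrier, in 1/m
    kappa = math.sqrt(2 * mass_kg * barrier_minus_energy_ev * EV) / HBAR
    return math.exp(-2 * kappa * width_m)

for width_nm in (0.5, 1.0, 2.0):
    t = transmission_estimate(1.0, width_nm * 1e-9)
    print(f"electron, V - E = 1 eV, L = {width_nm} nm: T ~ {t:.1e}")
```

The exponential is the key point: doubling the barrier width squares the (already small) transmission, which is why tunnelling only matters for very thin barriers.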

yessss the schrödinger cat picture on your quantum book!! that thought experiment is all about superposition: before it's observed, a quantum system can be in a combination of two states at once! and when two particles share one joint superposition where neither has a definite state of its own, but their measurement results are linked (measuring one tells you about the other), that's entanglement!
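One way to make "neither particle has a definite state of its own" concrete: a two-qubit state is unentangled exactly when it factors into one state per qubit, which shows up as a Schmidt rank of 1 (the number of nonzero singular values of its 2×2 coefficient matrix). This NumPy sketch compares a product state with the Bell state (|00⟩ + |11⟩)/√2; the helper name `schmidt_rank` is just an illustrative choice.

```python
import numpy as np

def schmidt_rank(state: np.ndarray, tol: float = 1e-12) -> int:
    """Number of nonzero Schmidt coefficients of a two-qubit state sum_ij c_ij |i>|j>."""
    coeffs = state.reshape(2, 2)   # c_ij: row = first qubit, column = second qubit
    singular_values = np.linalg.svd(coeffs, compute_uv=False)
    return int(np.sum(singular_values > tol))

# Product state: each qubit independently in (|0> + |1>)/sqrt(2).
plus = np.array([1, 1]) / np.sqrt(2)
product = np.kron(plus, plus)

# Bell state (|00> + |11>)/sqrt(2): measuring one qubit fixes the other's result.
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)

print("product state Schmidt rank:", schmidt_rank(product))  # 1 -> not entangled
print("Bell state Schmidt rank:", schmidt_rank(bell))        # 2 -> entangled
```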

frictionless planes and point masses do get old very fast, but they're there to introduce the simplest models first so bigger systems can be built up from them! if we add up the contributions from every point in a given object (and we do this adding with an integral, hence why we need all the math) we can find out a bunch of stuff about different objects -- gravitational field of different mass objects, electric field of charged objects, moment of inertia for rotating objects, etc! physics starts from very simple models/objects and principles/rules and builds up from there. in terms of motion/mechanics, high school focuses on forces acting on objects, which is the Newtonian approach, but there are also the Lagrangian and Hamiltonian methods, which are way easier for more complicated problems -- the Hamiltonian approach takes more setup, but it carries straight over into quantum mechanics, where the Hamiltonian becomes the energy operator in the Schrödinger equation!
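Here's the "add up the point masses" idea done literally, as a sketch: chop a uniform rod (mass and length are made-up values) into small pieces, treat each as a point mass dm at distance r from the centre, and sum dm·r². As the pieces shrink, the sum approaches the integral ∫r² dm = ML²/12.

```python
def rod_moment_of_inertia(mass: float, length: float, n_pieces: int) -> float:
    """Approximate I about a uniform rod's centre by summing point masses dm * r^2."""
    dm = mass / n_pieces
    dx = length / n_pieces
    total = 0.0
    for i in range(n_pieces):
        r = -length / 2 + (i + 0.5) * dx   # midpoint of the i-th piece, measured from the centre
        total += dm * r ** 2
    return total

M, L = 2.0, 3.0                 # hypothetical rod: 2 kg, 3 m
exact = M * L ** 2 / 12         # result of the integral of r^2 dm with dm = (M / L) dr over [-L/2, L/2]
for n in (2, 10, 1000):
    print(n, "pieces:", rod_moment_of_inertia(M, L, n), "exact:", exact)
```

With only 2 pieces the estimate is noticeably off (1.125 vs 1.5 kg·m²), and by 1000 pieces it agrees to about six digits, which is exactly why the integral is the tool of choice.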

then when we start talking about very large systems of particles that we can only describe probabilistically (like particles in a gas at a certain temperature, pressure, etc), that gets into statistical mechanics (I remember the Hamiltonian popping up here too; also damn this class was very tough and we had the worst prof for it), and complex systems (ex. predicting weather from atmospheric data) that require computer modelling. and if we start talking about accelerating charges, those radiate electromagnetic waves (light), so then we start talking about electromagnetism and optics. (oh and of course if we start talking about motion near the speed of light or in very strong gravitational fields then we get into relativity, and people are still trying to unify general relativity with quantum mechanics)
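The "describe probabilistically" part of statistical mechanics usually starts from the Boltzmann distribution: at temperature T, a state with energy E is occupied with probability proportional to exp(-E/kT), normalised by the partition function Z. A small sketch with a hypothetical two-level system whose gap is chosen as kT·ln 2, so the upper level is exactly half as likely as the lower one:

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def boltzmann_probabilities(energies_j, temperature_k):
    """Occupation probabilities p_i = exp(-E_i / kT) / Z for discrete energy levels."""
    kt = K_B * temperature_k
    weights = [math.exp(-e / kt) for e in energies_j]
    z = sum(weights)   # partition function
    return [w / z for w in weights]

T = 300.0
levels = [0.0, K_B * T * math.log(2)]   # hypothetical two-level system, gap = kT ln 2
print(boltzmann_probabilities(levels, T))  # approximately [0.667, 0.333]
```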

so there are a lot of 'classical'/older concepts before one even needs quantum, but it becomes very important in certain situations. to understand the intensity vs wavelength radiation curve of the sun for instance (the blackbody radiation curve), the classical wave model wasn't working (it predicted the intensity blowing up at short wavelengths), and Planck, in an 'act of desperation', assumed energy could only be emitted and absorbed in discrete packets ("quanta") of size E = hf, which worked! Einstein later applied the same idea to light itself, which is how we got the model of the photon. that quantization of energy concept was basically the start of quantum physics. and if you see anything about orbital clouds of electrons (which i first saw in chemistry), those come from the quantum wavefunction of the electron in the potential energy well of the nucleus.
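A sketch comparing Planck's law with the classical (Rayleigh-Jeans) prediction, treating the Sun as an ideal blackbody at about 5772 K (an approximation; the real solar spectrum has absorption lines and isn't a perfect blackbody). The classical curve matches at long wavelengths but overshoots enormously at short ones, while Planck's curve peaks near 500 nm.

```python
import numpy as np

H = 6.62607015e-34    # Planck constant, J*s
C = 2.99792458e8      # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K

def planck(wavelength_m, temperature_k):
    """Planck's law: spectral radiance B(lambda, T) in W / (m^2 sr m)."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2 * H * C ** 2 / wavelength_m ** 5 / np.expm1(x)

def rayleigh_jeans(wavelength_m, temperature_k):
    """Classical prediction; it grows without bound as lambda -> 0 (the 'ultraviolet catastrophe')."""
    return 2 * C * K_B * temperature_k / wavelength_m ** 4

T_SUN = 5772.0  # K, approximate effective temperature of the Sun
wavelengths = np.linspace(100e-9, 3000e-9, 20000)
peak = wavelengths[np.argmax(planck(wavelengths, T_SUN))]
print(f"Planck peak: {peak * 1e9:.0f} nm")

for lam_nm in (200, 500, 2000):
    lam = lam_nm * 1e-9
    print(lam_nm, "nm, classical / quantum:", rayleigh_jeans(lam, T_SUN) / planck(lam, T_SUN))
```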

your reading about quarks and nuclear forces sounds awesome!! the term color charge for quarks is actually totally new to me but that sounds interesting! admittedly we didn't cover that much in undergrad, though it's a big topic some people study in a master's, and there's a lot of research in nuclear/particle phys! (i myself am not currently in a master's but was thinking about it, still thinking about it but that's a whole other story haha)

Text

Observing one-dimensional anyons: Exotic quasiparticles in the coldest corners of the universe

Quantum physics: Turning bosons into anyons in a quantum gas

Nature categorizes particles into two fundamental types: fermions and bosons. While matter-building particles such as quarks and electrons belong to the fermion family, bosons typically serve as force carriers: examples include photons, which mediate electromagnetic interactions, and gluons, which mediate the strong force that binds quarks into protons and neutrons. When two fermions are exchanged, the quantum wave function picks up a minus sign, i.e., mathematically speaking, a phase of pi. This is totally different for bosons: their phase upon exchange is zero. This quantum statistical property has drastic consequences for the behaviour of fermionic and bosonic quantum many-body systems. It explains why the periodic table is built up the way it is (the antisymmetry forbids two electrons from occupying the same quantum state, so electrons fill successive shells), and it is at the heart of superconductivity (electrons bind into Cooper pairs that behave like bosons).
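A toy 1D illustration of the exchange phase, assuming two particles placed in the two lowest harmonic-oscillator orbitals (an arbitrary choice for the sketch). Combining the orbitals with relative phase θ = 0 gives a symmetric (bosonic) state and θ = π an antisymmetric (fermionic) one; the antisymmetric version vanishes when both particles sit at the same point, which is the Pauli exclusion principle at work. In this naive construction only θ = 0 and θ = π give states that simply pick up a phase under exchange; genuine 1D anyons require a phase that depends on the particles' ordering, which this sketch does not model.

```python
import numpy as np

def phi0(x):
    return np.exp(-x ** 2 / 2)       # 1D harmonic-oscillator ground state (unnormalised)

def phi1(x):
    return x * np.exp(-x ** 2 / 2)   # first excited state (unnormalised)

def pair_wavefunction(x1, x2, theta):
    """Two particles in orbitals phi0 and phi1, combined with relative phase e^{i theta}.

    theta = 0 gives the symmetric (bosonic) state, theta = pi the antisymmetric (fermionic) one.
    """
    return phi0(x1) * phi1(x2) + np.exp(1j * theta) * phi0(x2) * phi1(x1)

for name, theta in (("boson", 0.0), ("fermion", np.pi)):
    swap_ratio = pair_wavefunction(1.1, 0.3, theta) / pair_wavefunction(0.3, 1.1, theta)
    same_spot = abs(pair_wavefunction(0.7, 0.7, theta))
    print(f"{name}: psi(x2,x1)/psi(x1,x2) = {swap_ratio.real:+.3f}, |psi(x, x)| = {same_spot:.3f}")
```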

However, in low-dimensional systems, a fascinating new class of particles emerges: anyons, which are neither fermions nor bosons and have exchange phases between zero and pi. Unlike traditional particles, anyons do not exist independently but arise as excitations within quantum states of matter. This is akin to phonons, which are collective vibrations of a crystal lattice yet behave as distinct "particles of sound." While anyons have been observed in two-dimensional media, their presence in one-dimensional (1D) systems had remained elusive until now.

A study published in Nature reports the first observation of emergent anyonic behaviour in a 1D ultracold bosonic gas. This research is a collaboration between Hanns-Christoph Nägerl’s experimental group at the University of Innsbruck (Austria), theorist Mikhail Zvonarev at Université Paris-Saclay, and Nathan Goldman’s theory group at Université Libre de Bruxelles (Belgium) & Collège de France (Paris). The research team achieved this remarkable feat by injecting a mobile impurity into a strongly interacting bosonic gas, accelerating it, and meticulously analysing its momentum distribution. Their findings reveal that the impurity enables the emergence of anyons in the system.

“What's remarkable is that we can dial in the statistical phase continuously, allowing us to smoothly transition from bosonic to fermionic behavior,” says Sudipta Dhar, one of the leading authors of the study. “This represents a fundamental advance in our ability to engineer exotic quantum states.” The theorist Botao Wang agrees: “Our modelling directly reflects this phase and allows us to capture the experimental results very well in our computer simulations.”

This elegantly simple experimental framework opens new avenues for studying anyons in highly controlled quantum gases. Beyond fundamental research, such studies are particularly exciting because certain types of anyons are predicted to enable topological quantum computing, a revolutionary approach that could overcome key limitations of today’s quantum processors.

This discovery marks a pivotal step in the exploration of quantum matter, shedding new light on exotic particle behaviour that may shape the future of quantum technologies.

IMAGE: Researchers inject an impurity into a one-dimensional ultracold gas, thereby generating a quasiparticle with exotic properties. Credit: University of Innsbruck

Text

Ultralight Dark Matter Detection with Superconducting Qubits

Detecting Ultralight Dark Matter

Networks of entangled superconducting qubits can detect ultralight dark matter more sensitively than standard protocols. The research optimises network topology and measurement schemes to outperform standard detection methods while staying within the limits of current quantum hardware. Bayesian inference extracts the dark-matter-induced phase shifts, and the optimised networks are robust to local noise.

The enigma of dark matter continues to test modern physics, prompting research into new detection methods. A recent study describes a quantum sensor network that employs quantum entanglement and optimised measurement techniques to detect ultralight dark matter fluxes. In “Optimised quantum sensor networks for ultralight dark matter detection,” Tohoku University researchers Adriel I. Santoso (Department of Mechanical and Aerospace Engineering) and Le Bin Ho (Frontier Research Institute for Interdisciplinary Sciences and Department of Applied Physics) present their findings. They found that interconnected superconducting qubits in diverse network topologies improve detection over standard quantum protocols even in noisy conditions.

Scientists are refining methods to detect dark matter, a non-luminous form of matter thought to make up about 85% of the matter in the universe, which has so far evaded direct detection. The study proposes a network-based sensing architecture that uses superconducting qubits to boost sensitivity to ultralight dark matter and overcome the limitations of single sensors.

The approach relies on preparing superconducting qubits in superposition and entangling them with controlled-Z (CZ) gates, which apply a phase of -1 when both qubits are in |1⟩; the resulting correlations enable joint measurements across the network. The researchers tested linear chains, rings, star configurations, and fully connected graphs to find the network structure best suited to signal detection.
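A minimal NumPy sketch of the four topologies, assuming the standard graph-state recipe: every qubit starts in |+⟩ = (|0⟩ + |1⟩)/√2 and a CZ gate is applied along each edge. The function names and the four-qubit size are illustrative choices, not taken from the paper, and the paper's variational circuits may add further parameterised gates; this only builds the entangled resource state.

```python
from itertools import combinations

import numpy as np

def topology_edges(kind: str, n: int):
    """Edge lists for the network shapes named in the text (qubits labelled 0..n-1)."""
    if kind == "line":
        return [(i, i + 1) for i in range(n - 1)]
    if kind == "ring":
        return [(i, (i + 1) % n) for i in range(n)]
    if kind == "star":
        return [(0, i) for i in range(1, n)]
    if kind == "complete":
        return list(combinations(range(n), 2))
    raise ValueError(f"unknown topology: {kind}")

def graph_state(n: int, edges) -> np.ndarray:
    """Start from |+>^n and apply a controlled-Z gate on every edge."""
    dim = 2 ** n
    state = np.full(dim, 1 / np.sqrt(dim), dtype=complex)   # |+>^n: equal amplitudes
    for index in range(dim):
        bits = [(index >> (n - 1 - q)) & 1 for q in range(n)]   # qubit 0 is the most significant bit
        for a, b in edges:
            if bits[a] and bits[b]:
                state[index] *= -1   # CZ flips the sign when both qubits are |1>
    return state

for kind in ("line", "ring", "star", "complete"):
    edges = topology_edges(kind, 4)
    psi = graph_state(4, edges)
    print(f"{kind:8s} edges={len(edges)}  norm={np.linalg.norm(psi):.3f}")
```

Because CZ gates are diagonal, the topology changes only the signs of the amplitudes; which topology helps for sensing then depends on the state preparation and measurement layers around it.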

The study optimises quantum state preparation and measurement using variational metrology: the parameters of the preparation and measurement circuits are tuned to minimise the Cramér-Rao bound, which sets a lower limit on the uncertainty of any unbiased parameter estimate, in both the classical and the quantum setting. By carefully adjusting these parameters, the researchers can explore previously unreachable regions of parameter space and identify setups that boost sensitivity to a dark matter signal.
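To see what the Cramér-Rao bound measures, here is a sketch for the textbook case of a phase φ imprinted on every qubit by exp(-iφG) with G = Σᵢ Zᵢ/2 (an assumed signal model, not necessarily the paper's). For a pure state the quantum Fisher information is F_Q = 4 Var(G), and the quantum Cramér-Rao bound is Δφ ≥ 1/√(ν F_Q) after ν repetitions. A product state gives F_Q = n, while a GHZ state reaches n², the "Heisenberg" scaling the study uses as its benchmark.

```python
import numpy as np

def collective_generator(n: int) -> np.ndarray:
    """Diagonal of G = sum_i Z_i / 2 in the computational basis (Z|0> = +|0>, Z|1> = -|1>)."""
    diag = np.zeros(2 ** n)
    for index in range(2 ** n):
        ones = bin(index).count("1")
        diag[index] = ((n - ones) - ones) / 2
    return diag

def pure_state_qfi(state: np.ndarray, g_diag: np.ndarray) -> float:
    """Quantum Fisher information F_Q = 4 Var(G) for a pure state and a diagonal generator."""
    probs = np.abs(state) ** 2
    mean = np.sum(probs * g_diag)
    return float(4 * (np.sum(probs * g_diag ** 2) - mean ** 2))

def cramer_rao_bound(qfi: float, repetitions: int) -> float:
    """Quantum Cramer-Rao bound on the phase uncertainty: delta_phi >= 1 / sqrt(nu * F_Q)."""
    return 1 / np.sqrt(repetitions * qfi)

n = 4
g = collective_generator(n)
product = np.full(2 ** n, 1 / np.sqrt(2 ** n))   # |+>^n
ghz = np.zeros(2 ** n)
ghz[0] = ghz[-1] = 1 / np.sqrt(2)                 # (|0000> + |1111>) / sqrt(2)

for name, psi in (("product", product), ("GHZ", ghz)):
    f = pure_state_qfi(psi, g)
    print(f"{name:8s} F_Q = {f:.1f}, delta_phi >= {cramer_rao_bound(f, 1000):.4f} after 1000 shots")
```

In this toy model a bare graph state (|+⟩ⁿ followed only by CZ gates) has the same |amplitude|² as the product state, so it also gives F_Q = n; that is one way to see why the single-qubit preparation and measurement settings are worth optimising.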

In this scheme, interactions with dark matter are expected to imprint tiny phase shifts on the qubits. Bayesian inference, a statistical method for updating beliefs in light of evidence, is then used to estimate these phase shifts from the measurement outcomes, allowing reliable signal recovery and analysis. The study reports that well-chosen network topologies outperform protocols based on Greenberger-Horne-Zeilinger (GHZ) states, a standard benchmark in quantum sensing.
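A minimal sketch of Bayesian phase estimation on a grid, assuming a single-qubit Ramsey-type model in which outcome 0 occurs with probability (1 + cos φ)/2, a flat prior on [0, π], and idealised counts with no shot noise. The paper's network likelihood is more complex; this only shows the update rule: posterior ∝ prior × likelihood.

```python
import numpy as np

def phase_posterior(n_zero: int, n_shots: int, grid: np.ndarray) -> np.ndarray:
    """Discrete posterior over phi on a uniform grid, assuming P(0 | phi) = (1 + cos phi) / 2 and a flat prior."""
    p0 = np.clip((1 + np.cos(grid)) / 2, 1e-12, 1 - 1e-12)   # clip to avoid log(0)
    log_likelihood = n_zero * np.log(p0) + (n_shots - n_zero) * np.log(1 - p0)
    posterior = np.exp(log_likelihood - log_likelihood.max())   # subtract max for numerical stability
    return posterior / posterior.sum()

true_phi = 0.4                                                  # hypothetical phase shift, radians
n_shots = 1000
n_zero = int(round(n_shots * (1 + np.cos(true_phi)) / 2))       # idealised counts

grid = np.linspace(0, np.pi, 4001)   # restrict to [0, pi]: cos(phi) cannot tell phi from -phi
weights = phase_posterior(n_zero, n_shots, grid)
mean = np.sum(grid * weights)
std = np.sqrt(np.sum(weights * (grid - mean) ** 2))
print(f"posterior: phi = {mean:.3f} +/- {std:.3f} rad")
```

The posterior width shrinks roughly as 1/√(shots), consistent with the Cramér-Rao bound for this single-qubit model.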

Practicality is a major benefit of this strategy. The optimised networks keep circuit depths modest, so the computation requires fewer sequential gate operations. This is crucial because current noisy intermediate-scale quantum (NISQ) hardware has limited coherence times. The work also shows robustness to local dephasing noise, a common error in quantum systems caused by interactions with the environment, which supports reliable performance under realistic conditions.
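Why local dephasing matters for the GHZ benchmark can be seen in a textbook toy model (not the paper's analysis): if each qubit's coherence decays by a factor exp(-γt), a product state keeps F_Q = n·exp(-2γt), but a GHZ state's single coherence term decays n times faster, giving F_Q = n²·exp(-2nγt). The qubit count of 8 and the γt values below are illustrative.

```python
import math

def qfi_product(n: int, gamma_t: float) -> float:
    """n independent qubits, each with its equatorial Bloch vector shrunk to exp(-gamma * t)."""
    return n * math.exp(-2 * gamma_t)

def qfi_ghz(n: int, gamma_t: float) -> float:
    """GHZ coherence |0...0><1...1| picks up exp(-n * gamma * t) under independent local dephasing."""
    return n ** 2 * math.exp(-2 * n * gamma_t)

for gamma_t in (0.0, 0.05, 0.5):
    print(f"gamma*t = {gamma_t}: product F_Q = {qfi_product(8, gamma_t):.2f}, "
          f"GHZ F_Q = {qfi_ghz(8, gamma_t):.2f}")
```

The GHZ advantage disappears once noise is strong enough, which is why noise-robust alternatives to GHZ-based sensing are attractive on NISQ hardware.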

The study highlights the role of network structure in dark matter detection. By combining entanglement with network topology optimisation, the researchers aim to build scalable approaches that enhance sensitivity and expand the dark matter search. Future work will examine more complex network topologies and develop advanced data processing methods to improve sensitivity and precision. Integration with current astrophysical observations and direct detection experiments could help solve the dark matter mystery.

Squeezer